Fundamental research thrusts in the lab span theory and algorithms that aim to address the limitations of AI in practice.

Here at the lab, we believe that the most useful concepts in AI and ML reveal themselves through constant exposure to domain-specific challenges and constraints.

It is these limitations that force us to get creative and think about how to address the hurdles that stand in the way of widespread adoption of AI technology.

Our goal is to alleviate these pain points to best meet the needs of potential users of AI technology, whether they be subject matter experts, developers of AI systems, or general users.

To give a flavor of the work happening at the lab, here is a selection of five broad application areas where we are applying our research.

Informed Machine Learning

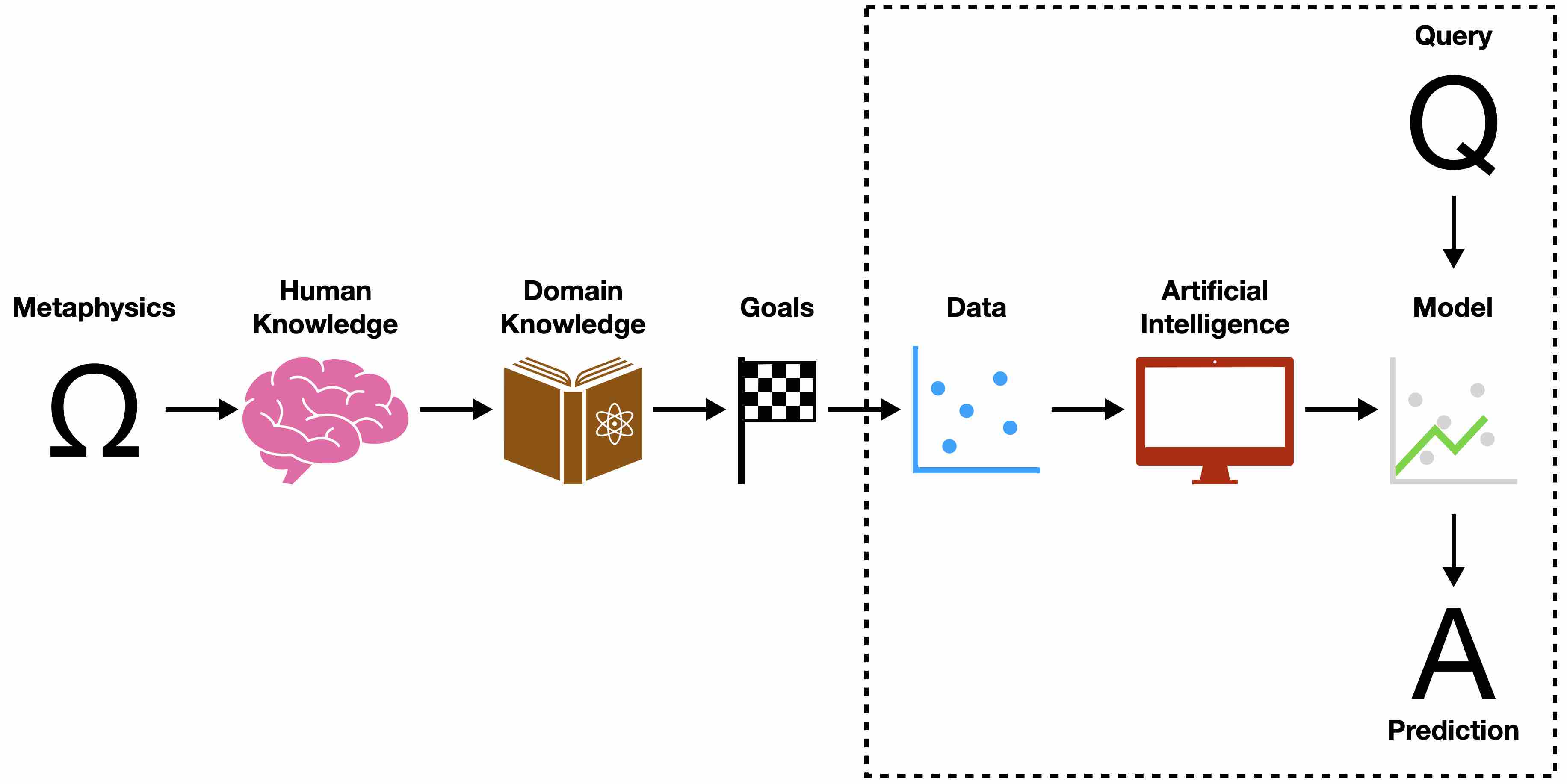

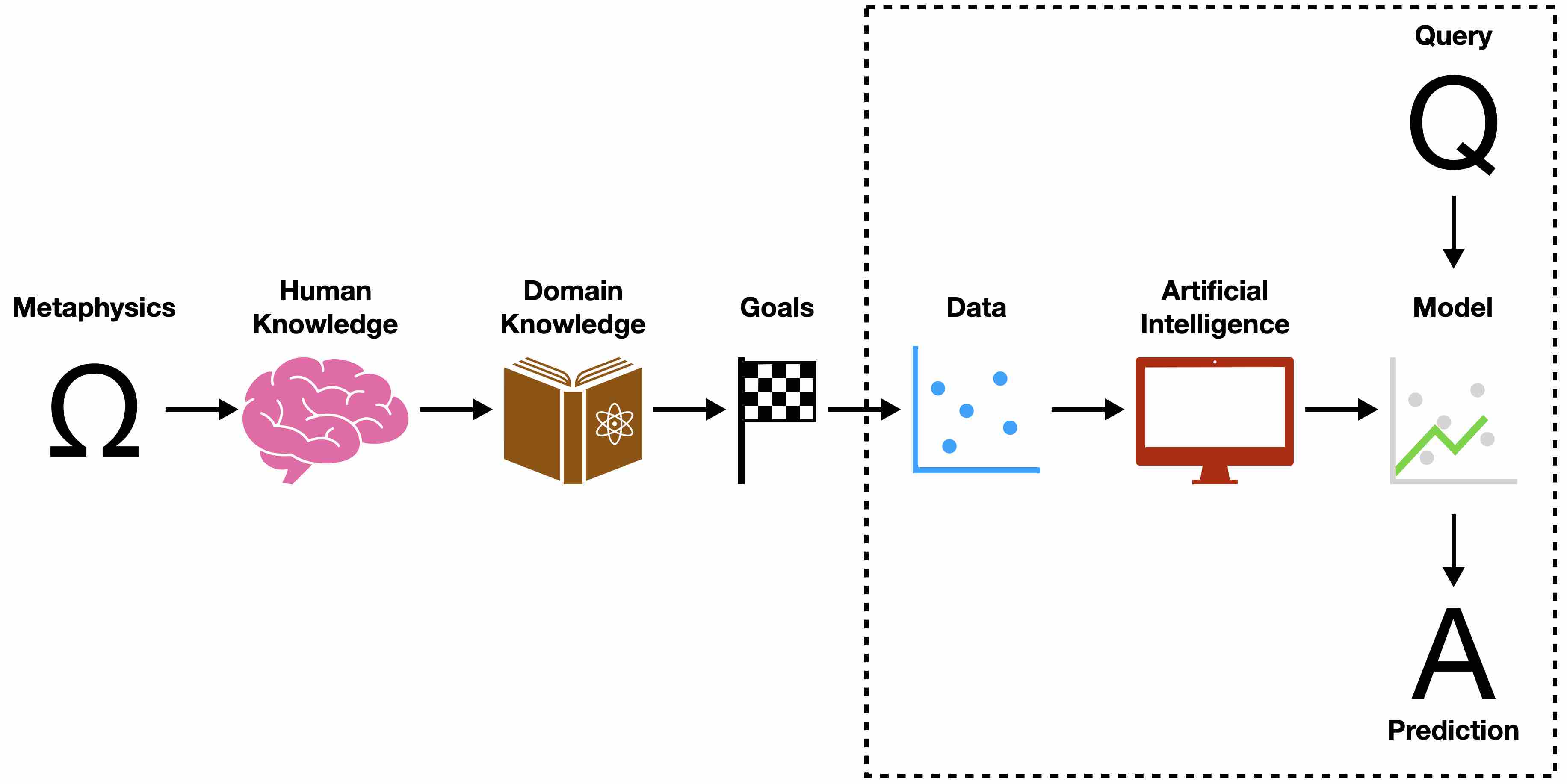

Auton Lab research frequently involves ways to incorporate expert knowledge into AI systems. We recognize that studying only the data, model, and predictions is an unnecessarily limiting perspective, because these factors are shaped by upstream conditions, knowledge, and design choices. Figuring out how to incorporate this upstream knowledge into AI systems represents a huge opportunity to guide AI toward learning data-driven policies that also respect common-sense norms. Work in this context ranges from research on how to have experts label vast amounts of data effectively, to incorporating feedback in active learning frameworks, to formal verification of model adherence to domain-specific constraints and design specifications. We take AI outside the comfort zone of purely data-driven approaches. Standard AI relies primarily on what can be learned from data; however, data is just a limited projection of reality. Auton Lab is working on multiple exciting avenues to make AI and ML smarter.

Highlighted Work

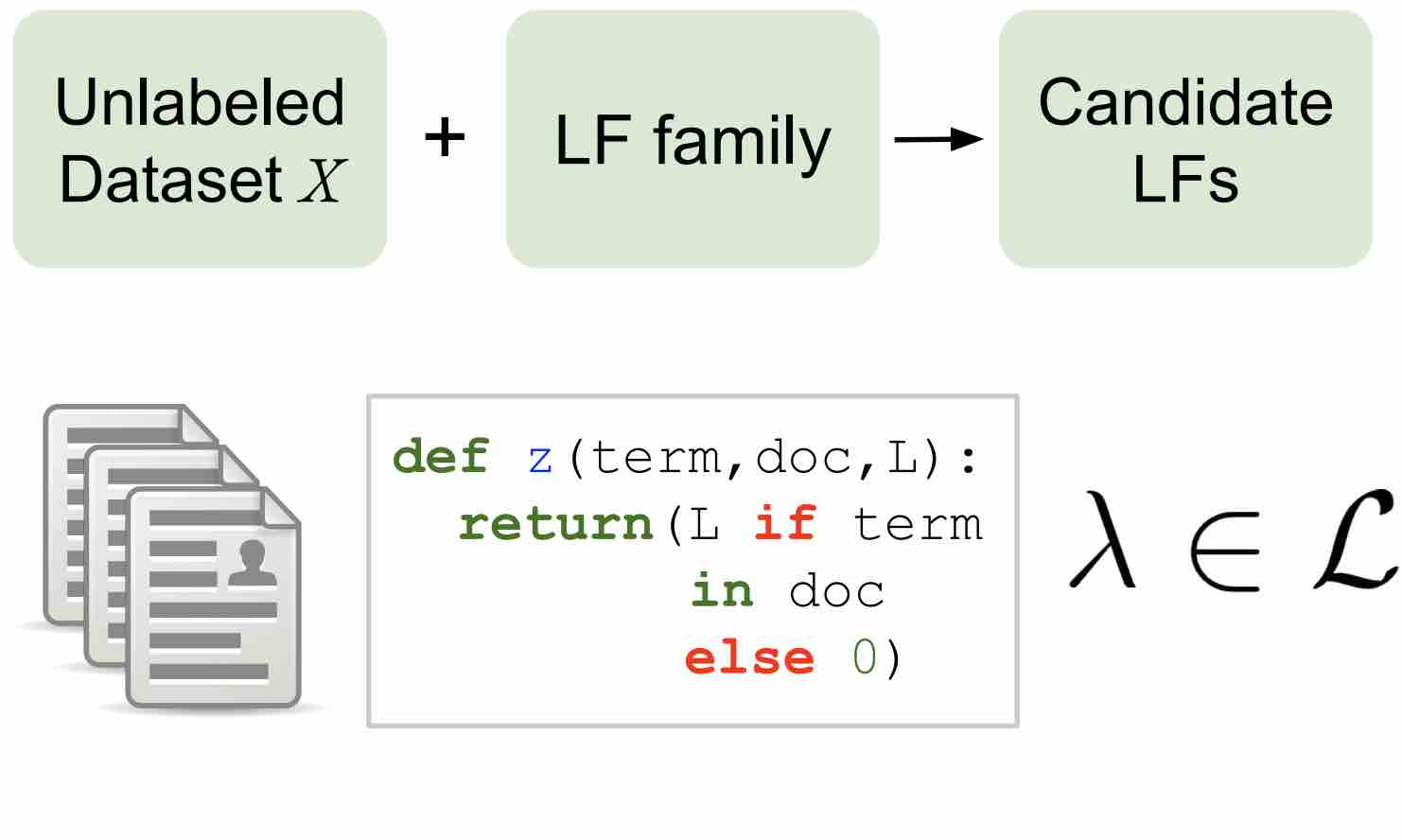

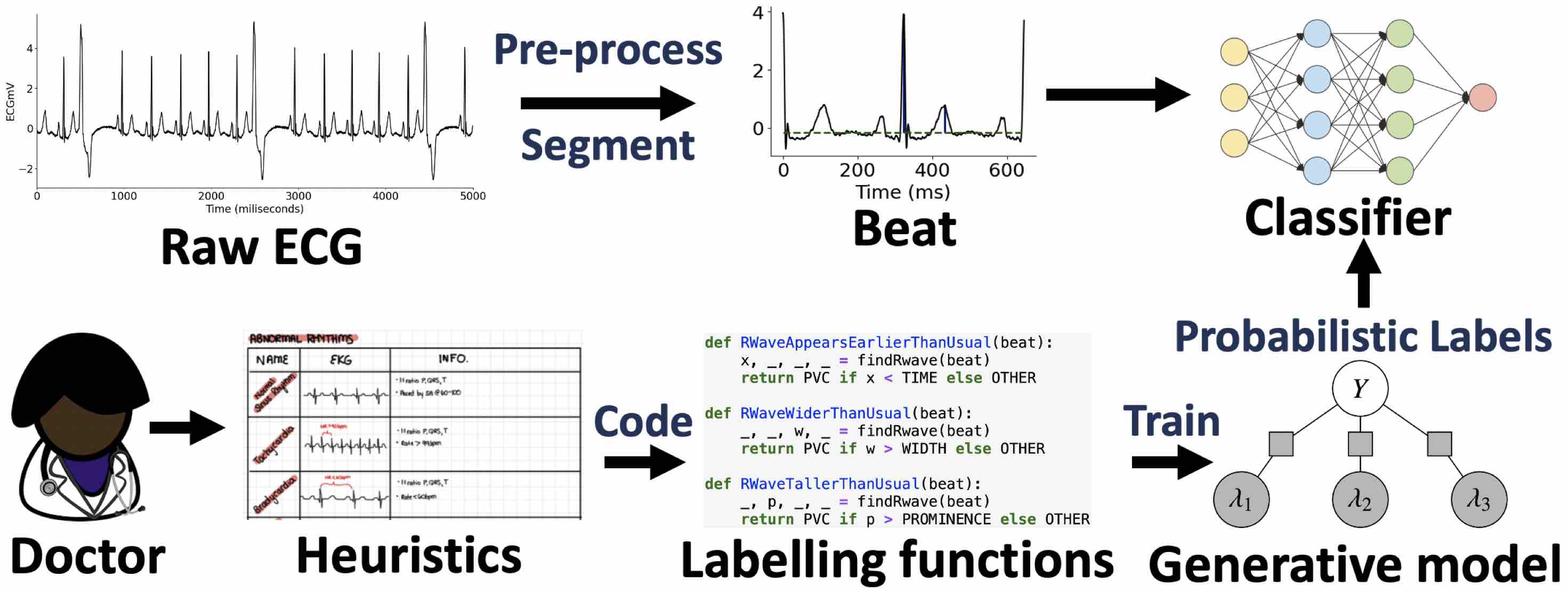

Weak Supervision

Many state-of-the-art models have a voracious appetite for labeled data, which is hard to provide in contexts where subject matter experts are the only people capable of providing annotations. The weak supervision paradigm replaces the labeling of individual data samples with the creation of labeling functions, which are heuristic rules that assign (possibly noisy) labels to many samples at once. Auton Lab work expands this paradigm to increase the efficiency and flexibility of the data programming framework.
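
To make the idea of labeling functions concrete, here is a minimal Python sketch. It is not the lab's data programming implementation: the toy spam task, the three labeling functions, and the majority-vote combiner `weak_label` are all hypothetical, and data programming frameworks typically replace the simple majority vote with a learned model of how accurate each labeling function is.

```python
from collections import Counter

ABSTAIN = -1  # a labeling function returns this when it has no opinion

# Hypothetical labeling functions for a toy task (1 = spam, 0 = not spam).
def lf_contains_free(text):
    return 1 if "free" in text.lower() else ABSTAIN

def lf_has_greeting(text):
    return 0 if text.lower().startswith(("hi", "hello")) else ABSTAIN

def lf_many_exclamations(text):
    return 1 if text.count("!") >= 3 else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_free, lf_has_greeting, lf_many_exclamations]

def weak_label(text, lfs=LABELING_FUNCTIONS):
    """Combine labeling-function votes by majority; abstain on ties or no votes."""
    votes = [v for v in (lf(text) for lf in lfs) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return ABSTAIN  # conflicting evidence of equal strength
    return counts[0][0]

unlabeled = [
    "FREE prize!!! Click now",
    "Hello, are we still meeting tomorrow?",
    "See you later",
]
weak_labels = [weak_label(text) for text in unlabeled]
```

Samples on which every labeling function abstains stay unlabeled, so an expert writes a handful of rules rather than annotating each sample by hand.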

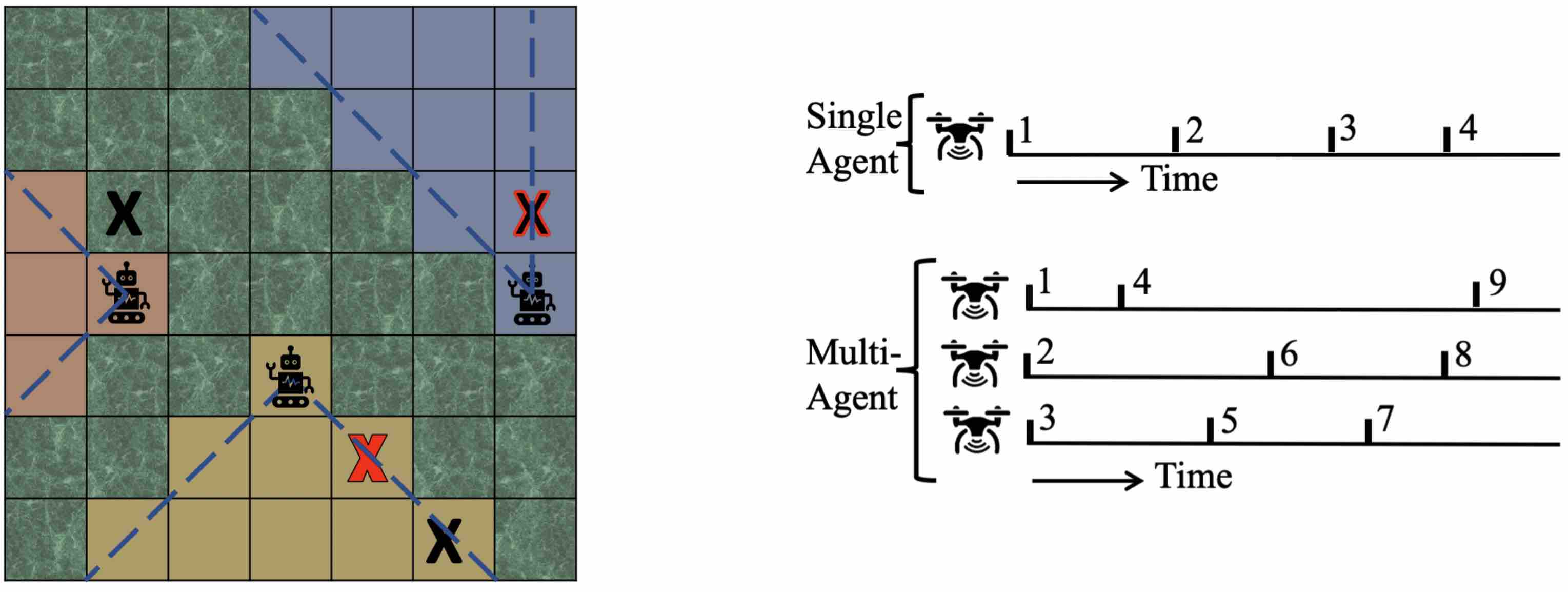

Active Search

Multi-Agent Active Search (MAAS) refers to the problem of coordinating a team of agents to actively search for a sparse set of targets in an unknown domain. MAAS applications include robotic search-and-rescue, gas-leak detection, military reconnaissance, and disaster relief. Despite the recent interest in MAAS technologies, pilots often face significant coordination challenges, preventing the effective deployment of drones in the field. Consequently, Auton Lab focuses on developing algorithms and theory to help enable next-generation MAAS applications.

Principle-Driven AI

This thrust is about leveraging domain knowledge, leveraging first principles of physics, chemistry, and biology, and both leveraging and demonstrating common sense. The utility of machine learning is that it learns useful policies from data; however, how to incorporate domain-specific constraints into the training process remains an open question. Auton Lab works to help subject matter experts (SMEs) codify their knowledge in a way that informs the model-fitting process, including through physics-informed algorithms.

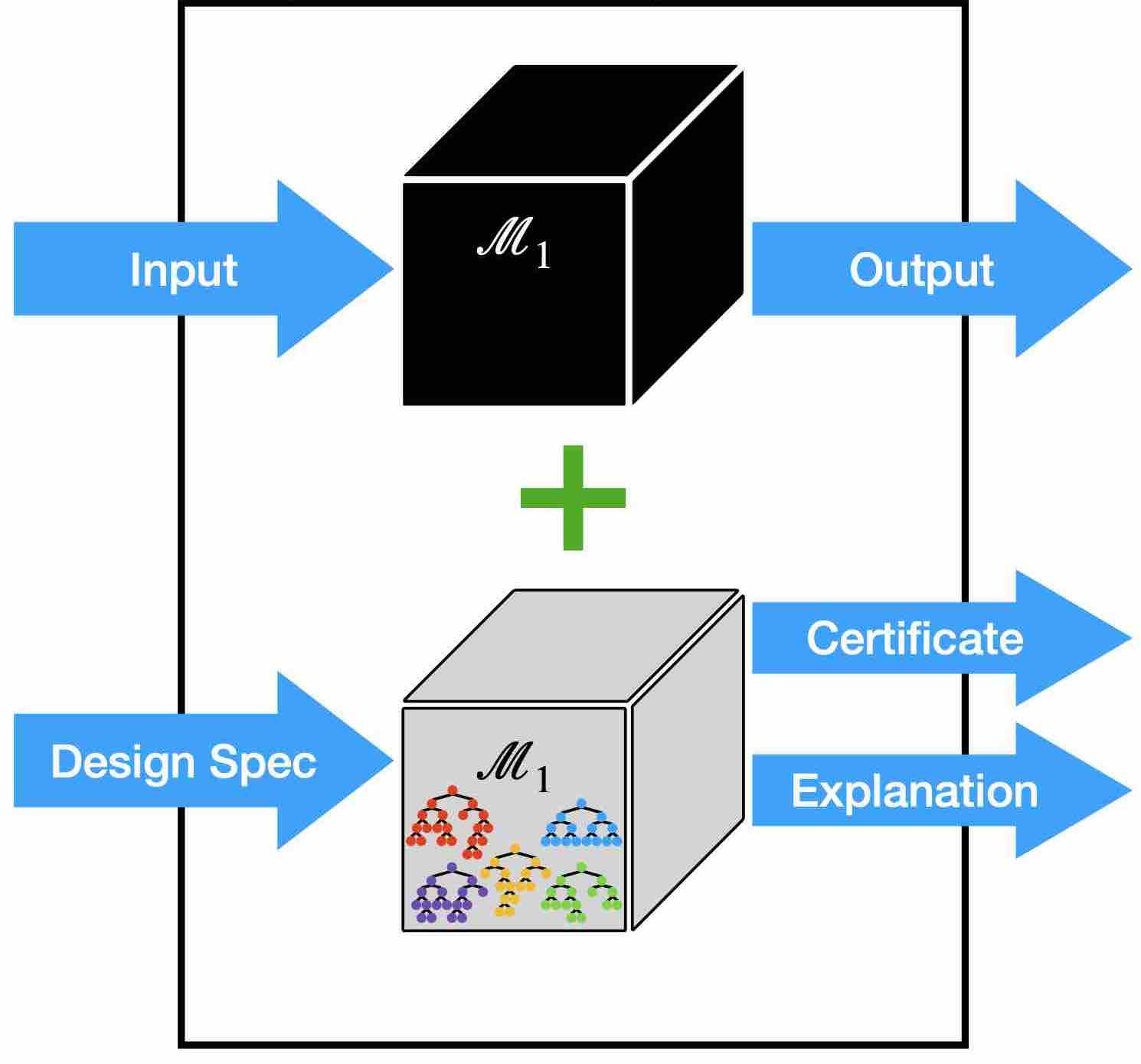

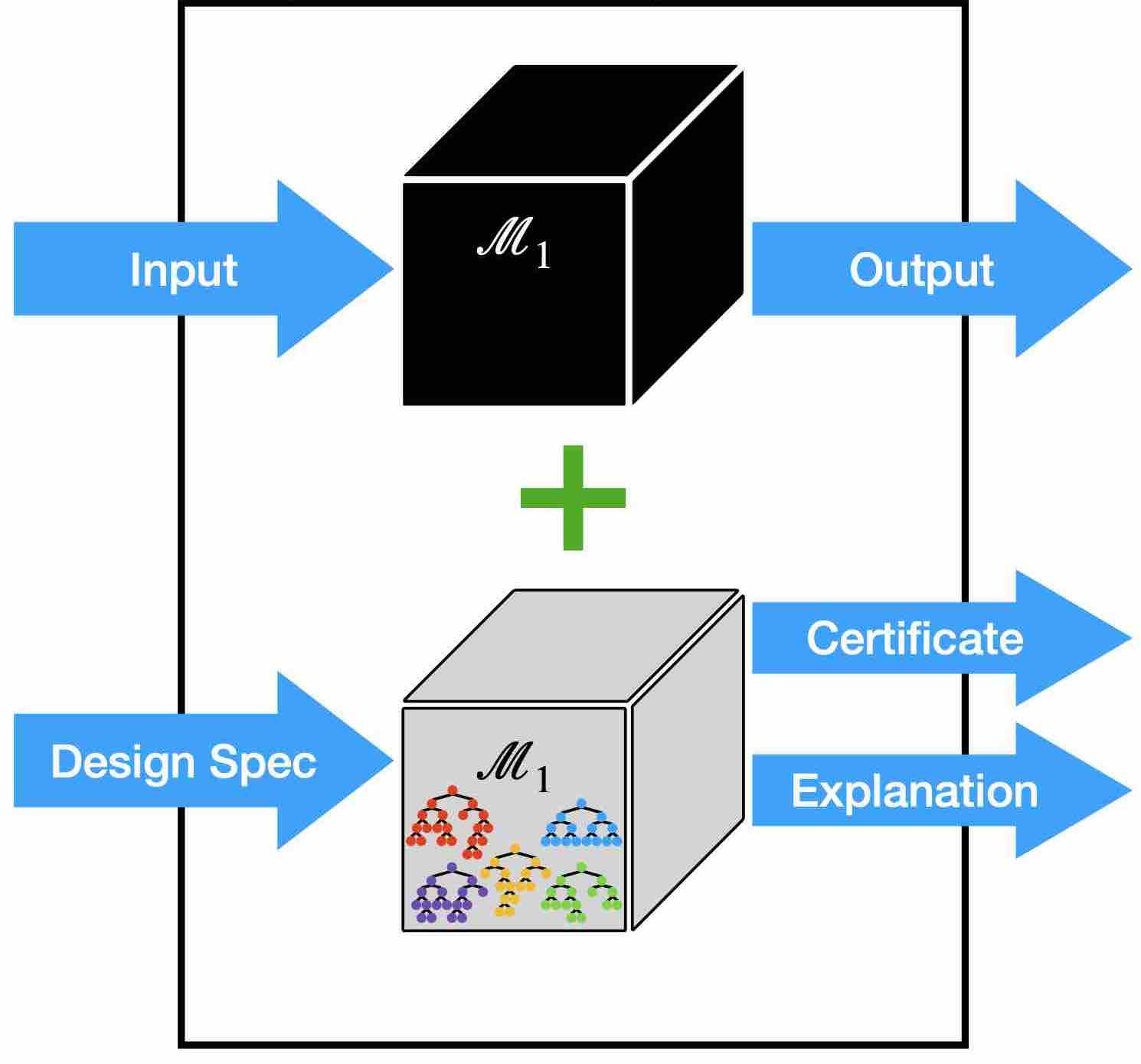

Introspective AI

Models should be conscious of their own decision logic, and able to admit what they can and cannot do. Through formal verification, we can provide formal guarantees about whether a trained model exhibits fair decision making globally, whether it is robust to imperceptible perturbations of its input, and whether it adheres to safety-paramount engineering constraints. In cases where these properties are violated, returning the explicit environmental conditions that cause the violation lets the model admit when it can or cannot be trusted. This information may also help the model learn from its past mistakes, iteratively producing a model that better adheres to critical design specifications.

Knowledge Discovery

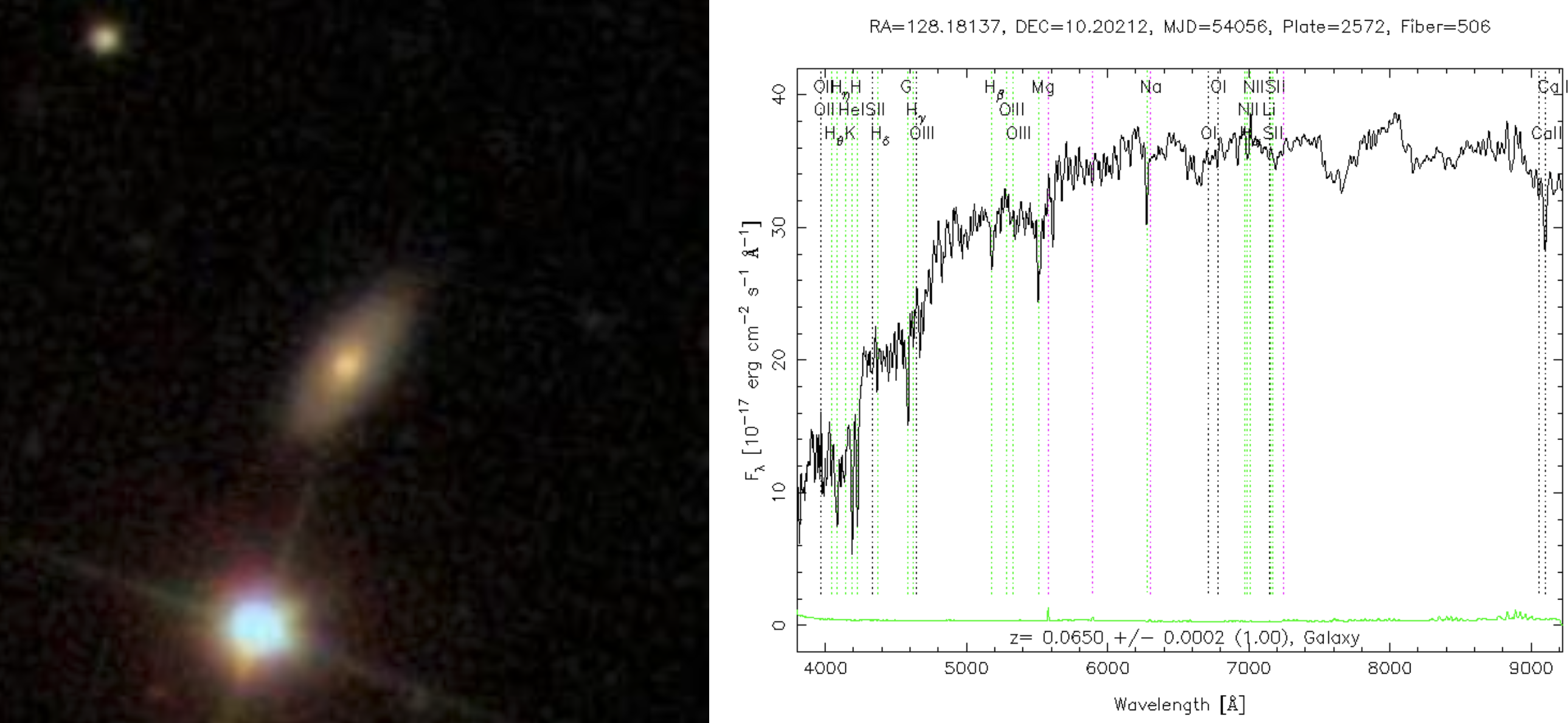

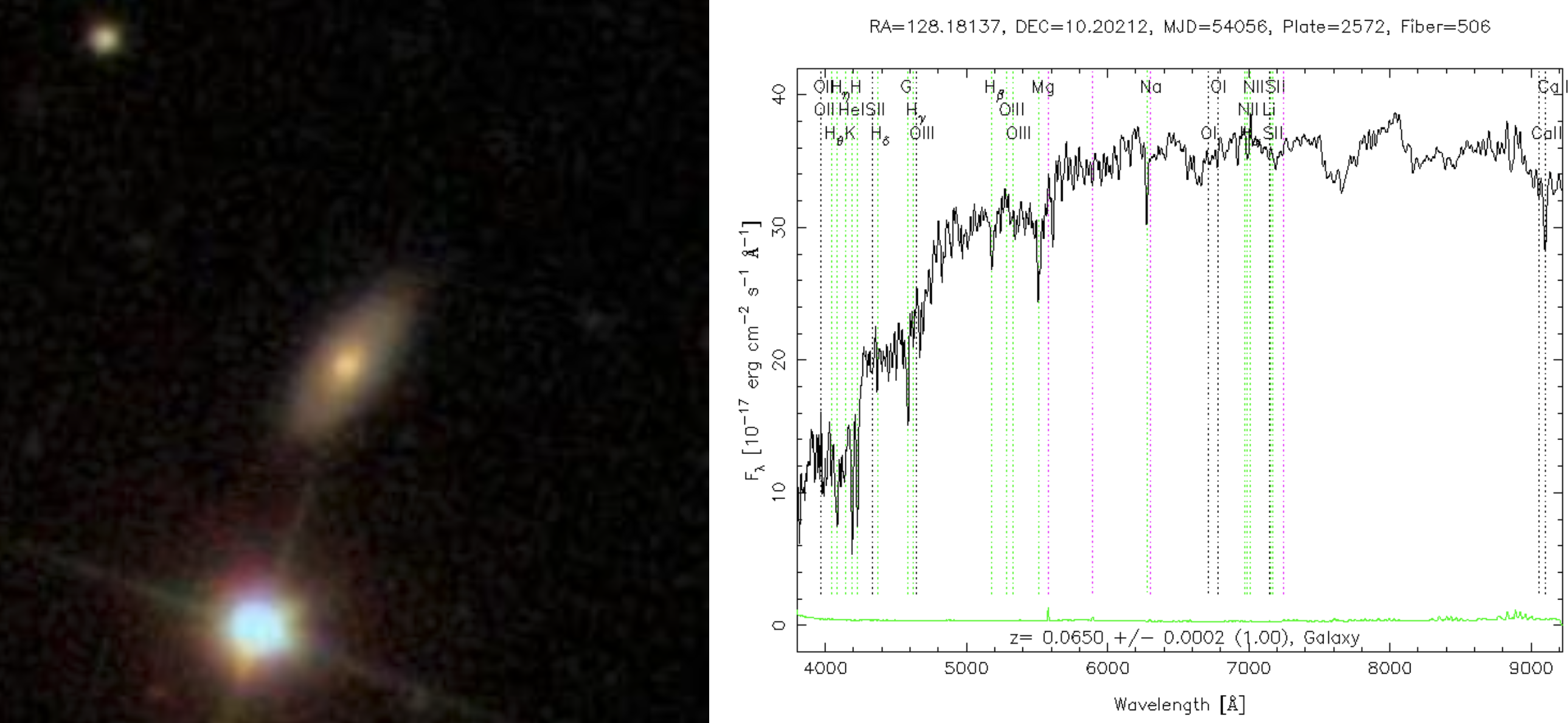

This thrust uses AI to discover structure in data. This structure can be leveraged to learn something new about the data itself, furthering the science of the field. The resulting insight can also be used to inform design decisions and engineer the best model possible. Intelligibility of the discovered knowledge is critical, as it facilitates communication of results and ensures that all insights generated are actionable.

Highlighted Work

Data Mining

Leveraging hidden structure in data informs design decisions in modeling paradigms and expands the set of possibilities for building useful models. This work includes uncovering patterns that are obscured by class imbalance or by assumptions of homogeneity, as well as data-driven discovery of formal specifications that could be useful in demonstrating the trustworthiness of a model to a human user.

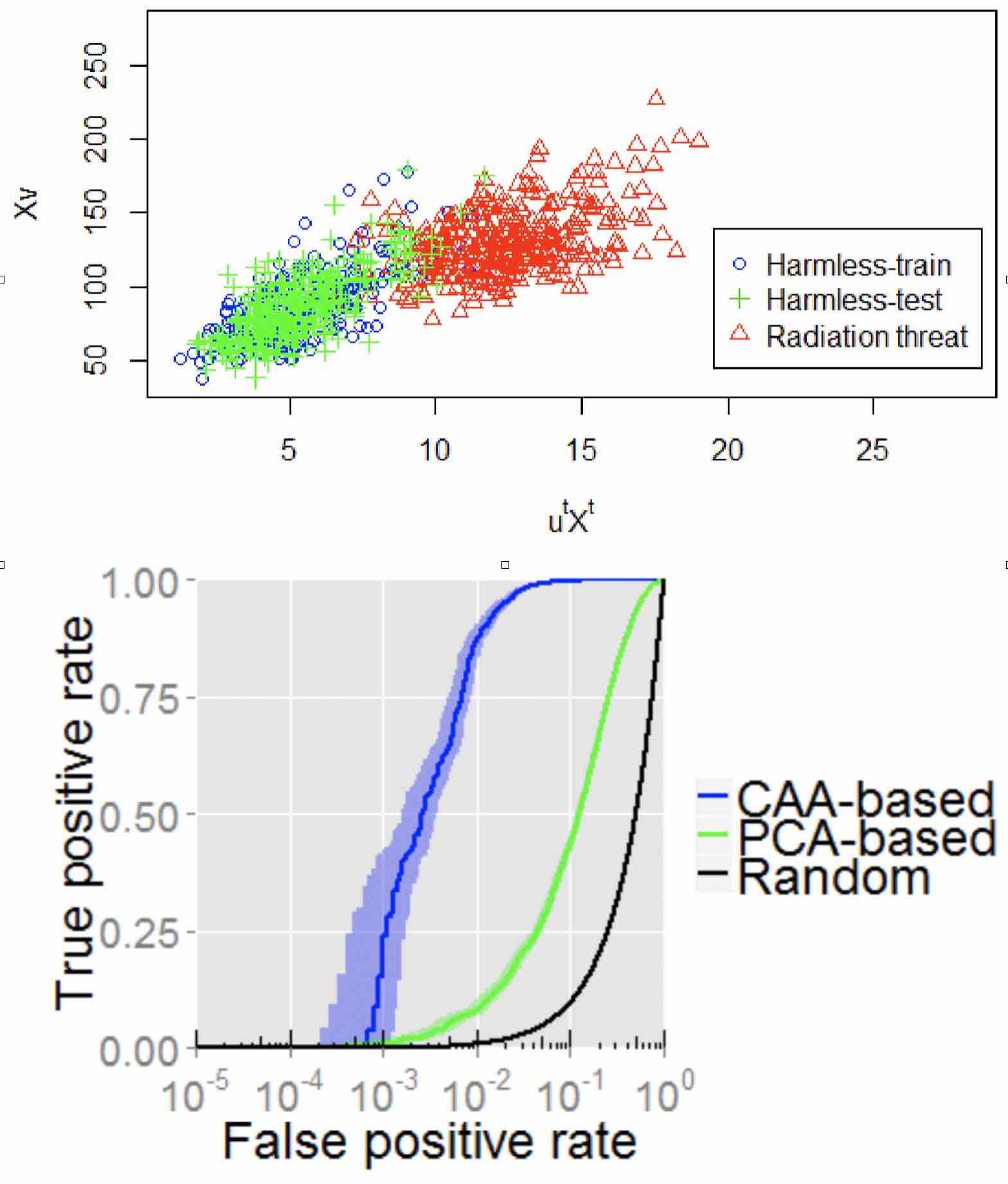

Anomaly Detection

Capturing rare events in data also provides leads for investigation to understand the environmental conditions under which a model performs well or poorly. Data-centric anomalies describe events that are rare, and model-centric anomalies describe fault modes. Combining data-centric and model-centric anomaly detection allows us to talk not only about low error rates, but also about the ways in which those rare events will manifest, circumventing the need to discover faults empirically, where otherwise easily preventable harm can be inflicted.

Pragmatic Deep Learning

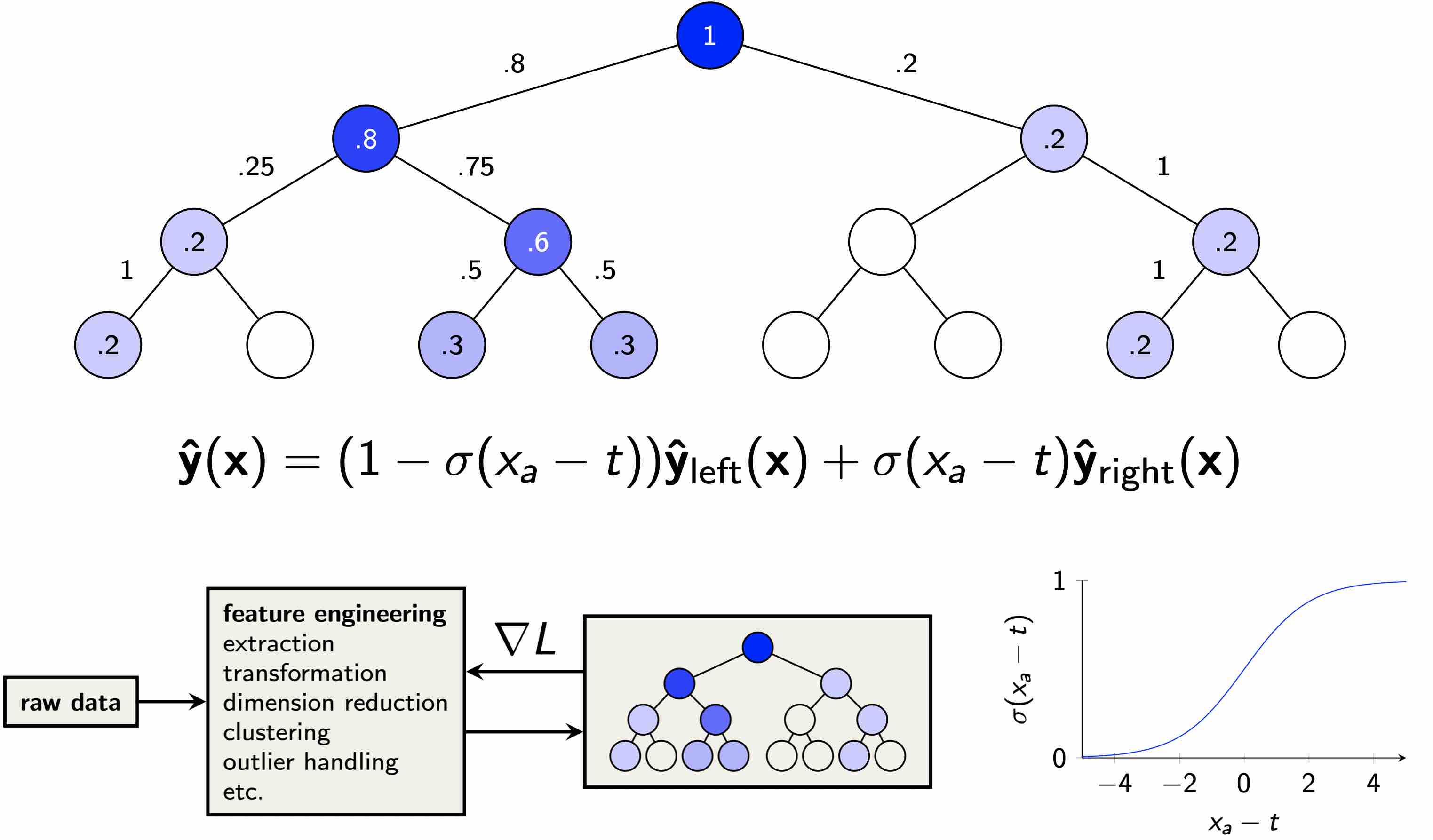

Some of the contemporary limitations of deep learning can be traced back to the field's early days. At Auton Lab, we try to unlock the same benefits of deep learning with novel approaches that reduce the complexity or the appetite for data. An additional goal is to increase interpretability by keeping models as simple as possible. This thrust involves building new model classes that are capable of deep learning while possessing structural advantages that make the methods applicable in select real-world contexts.

Highlighted Work

Deep RL for Nuclear Fusion

Nuclear fusion is one of the most exciting prospective energy generation technologies available to humanity. By replicating the nuclear reaction that takes place in the sun, one could extract millions of times more energy per gram of fuel than by using traditional combustion methods. The most promising technologies for fusion require a plasma to be magnetically confined and heated to extreme temperatures. Because the dynamics of plasma are complex, they are difficult to model and simulate, and it is challenging to design controllers that can achieve the necessary conditions for fusion. Auton Lab uses AI to learn dynamics models and value functions for plasma control problems that can help us achieve successful controlled fusion. We tackle problems like regulating the plasma pressure, predicting potential 'disruptions' in confinement, and safely shutting down the machine. We have demonstrated in experiments on the DIII-D tokamak in San Diego that AI can successfully predict the evolution of plasma dynamics and find controls that achieve goal plasma states. As projects like the ITER tokamak in France become operational, we hope that our work can inspire additional applications of AI to achieve fusion reactions with positive net energy.

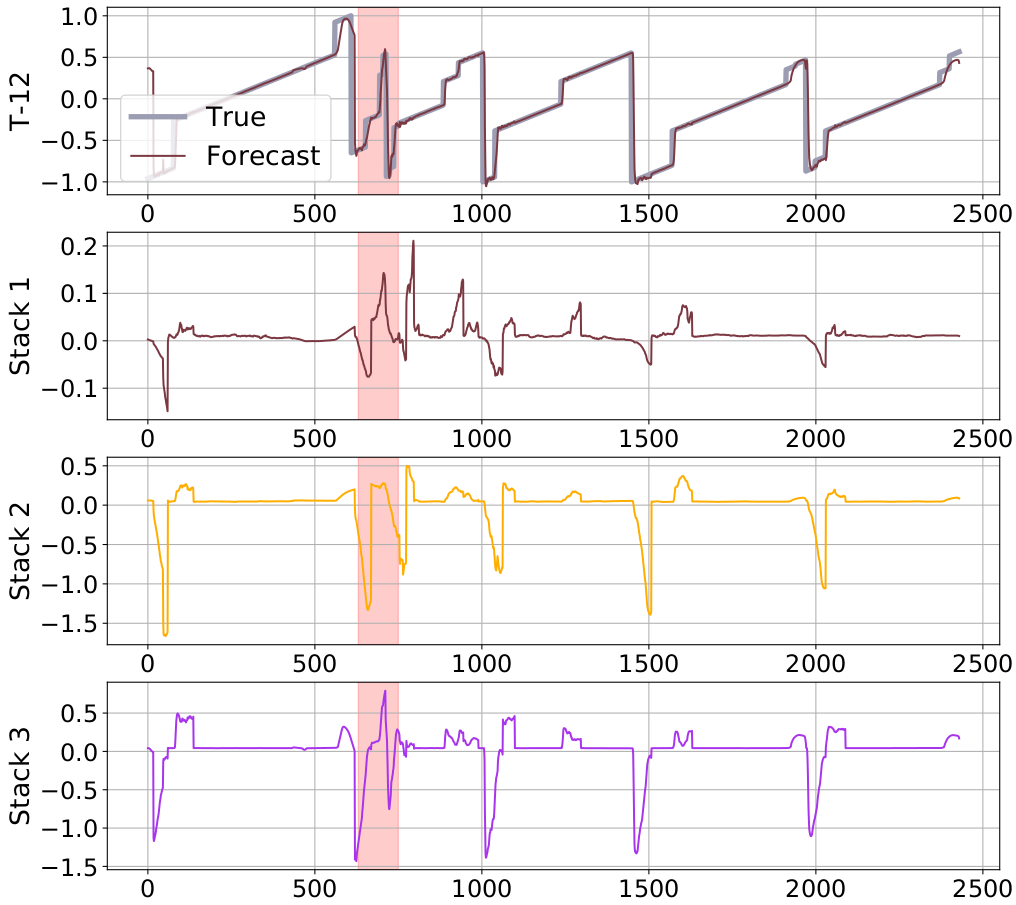

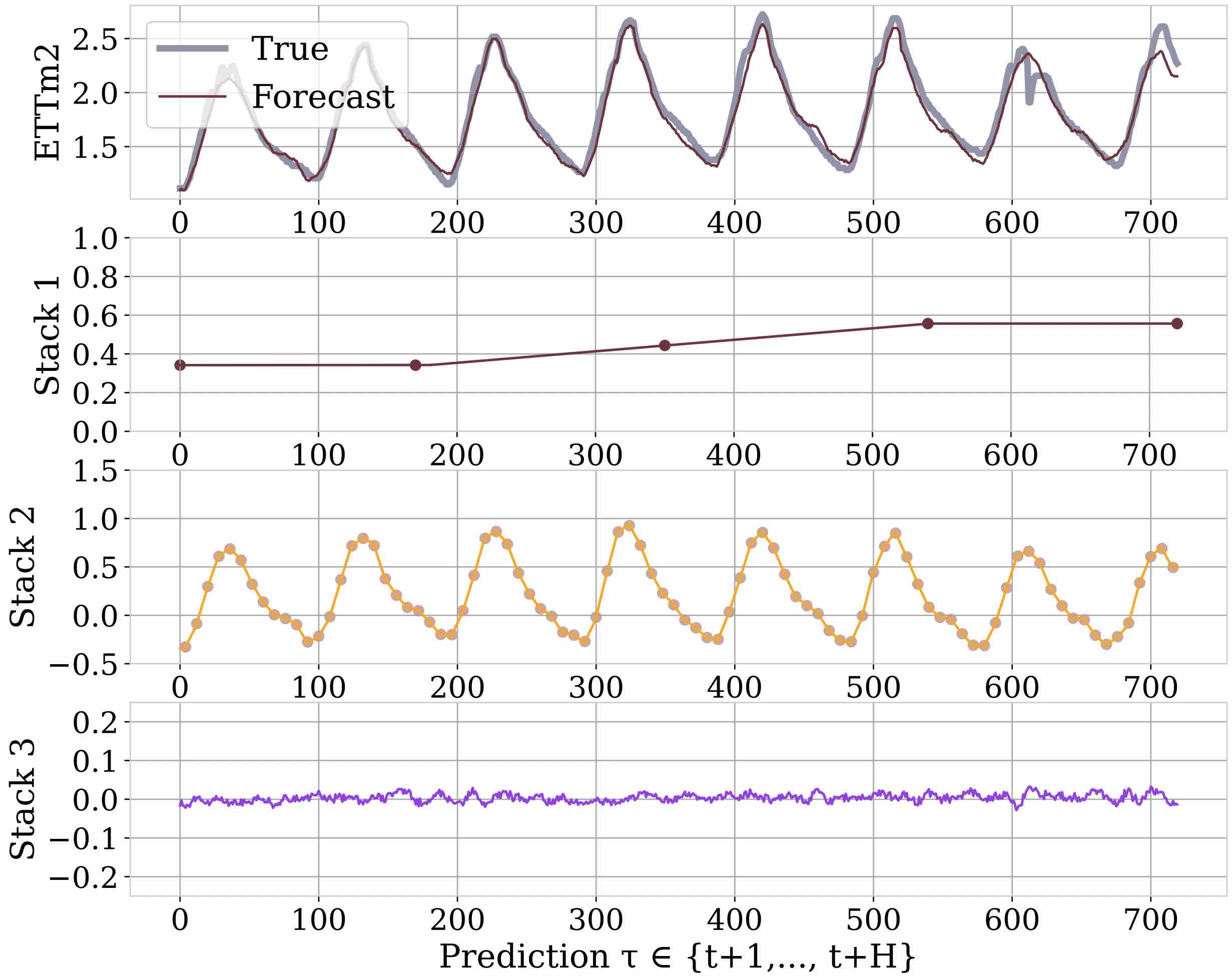

Hybrid Forecasting

Multivariate time-series forecasting, classification, and anomaly detection. Incorporating exogenous variables to reduce forecasting error. Learning hierarchical, structural elements of data to aid in forecasting.

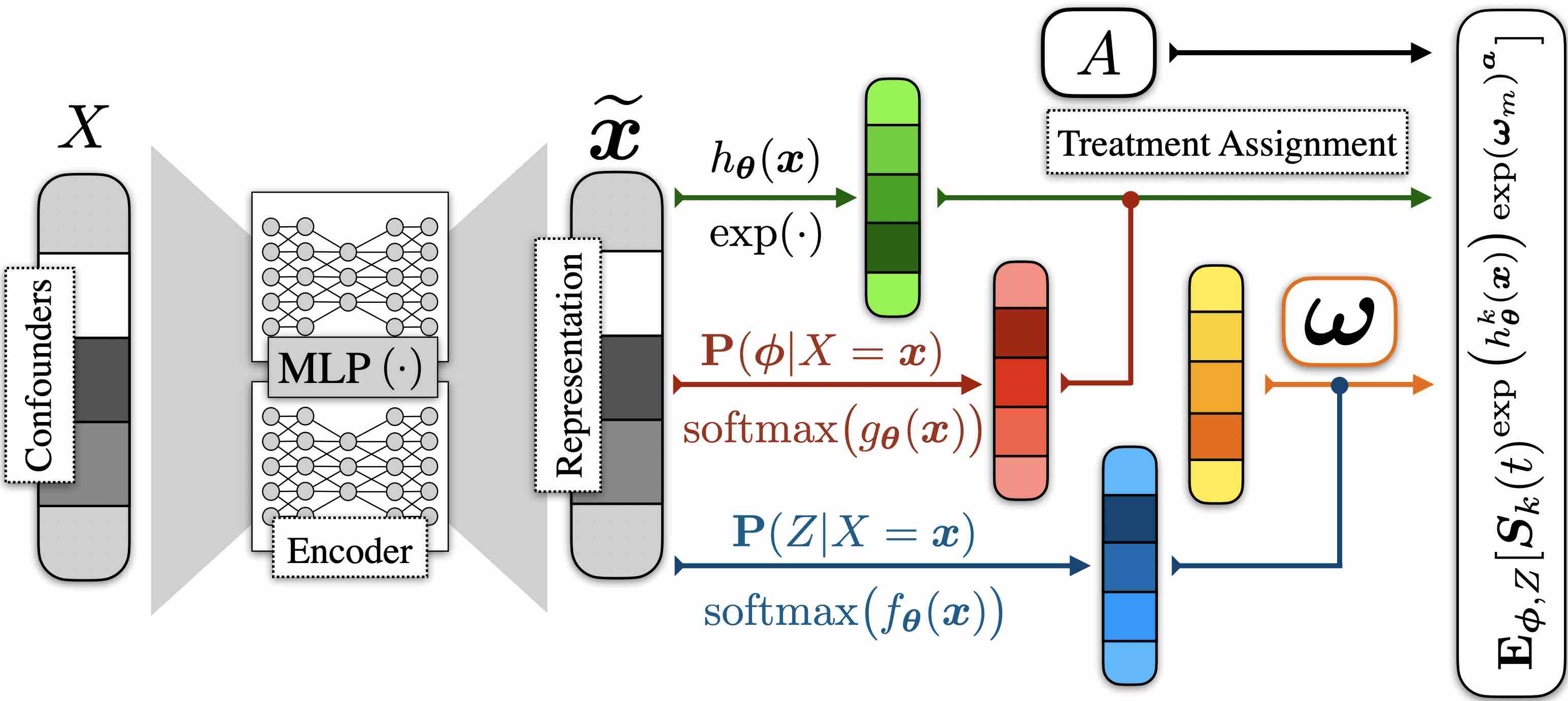

Deep Survival Analysis

Predicting time to failure under censored outcomes. Discovering effective interventions under assumptions of heterogeneity.
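
Censoring means that for some units we only know that failure had not yet occurred when observation stopped. As a classical, non-deep point of reference (not the lab's deep survival models), the Kaplan-Meier estimator sketched below shows how censored observations still contribute to the at-risk counts instead of being discarded. The function name `kaplan_meier` and the toy data are assumptions made for illustration.

```python
def kaplan_meier(durations, observed):
    """Estimate the survival curve from right-censored data.

    durations: time until failure or until observation stopped (censoring)
    observed:  True if the failure was observed, False if the unit was censored
    Returns [(time, survival_probability)] at each time with an observed failure.
    """
    event_times = sorted({t for t, e in zip(durations, observed) if e})
    survival = 1.0
    curve = []
    for t in event_times:
        # Units still under observation just before time t, censored or not.
        at_risk = sum(1 for d in durations if d >= t)
        failures = sum(1 for d, e in zip(durations, observed) if d == t and e)
        survival *= 1.0 - failures / at_risk
        curve.append((t, survival))
    return curve

# Toy data: five machines, two of which were still running when observation ended.
durations = [2, 3, 3, 5, 8]
observed = [True, False, True, True, False]
curve = kaplan_meier(durations, observed)
# curve is approximately [(2, 0.8), (3, 0.6), (5, 0.3)]
```

Dropping the two censored machines would bias the estimate toward earlier failures, because they are exactly the units known to have survived longer.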

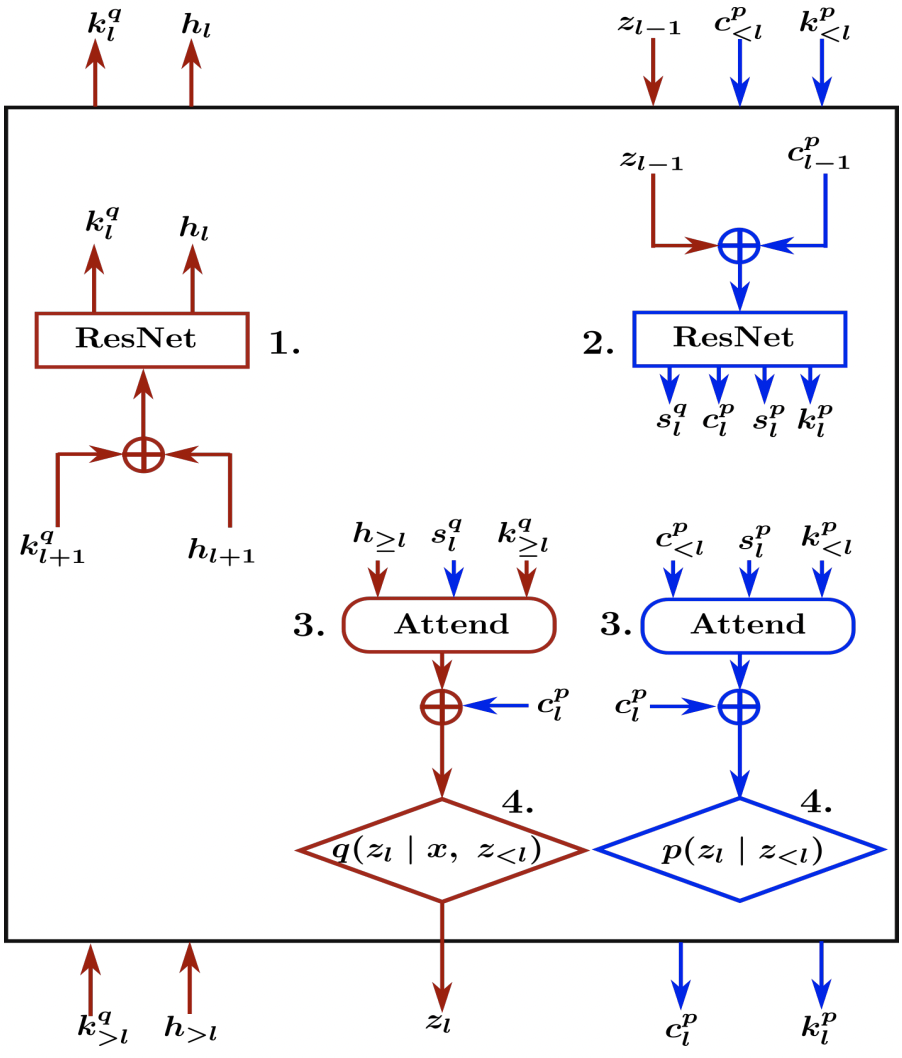

Bayesian Deep Learning

Auton Lab works on methods to build autoencoders with fewer parameters than deep network alternatives, trading fidelity for scalability. This work enables accurate forecasting for long windows into the future. Variational autoencoders break the assumption of independence among subsequent layers in the network. That reduces the complexity of the model while maintaining performance, which in turn reduces training time and resource consumption.

Genetic Curriculum for Deep Reinforcement Learning

Auton Lab focuses on using curriculum learning and genetic algorithms to train an RL agent sample-efficiently against the long tail of scenarios (the last 10% of scenarios, which are difficult to train on). One approach to achieving robustness is to use adversarial agents that inject noise during training to steer exploration toward these challenging scenarios. However, this often converges to worst-case situations in which the protagonist cannot learn, and it requires expert supervision to avoid this issue. Instead, we use genetic algorithms to generate adversarial scenarios. Because they are generated via genetic algorithms, these scenarios are similar to each other and can serve as a curriculum for RL, in which skills are transferred between similar tasks of varying difficulty. Empirical results show that our algorithm yields RL agents that are 2 to 8 times less likely to fail a task without sacrificing performance (average cumulative reward).

Offline Reinforcement Learning

Auton Lab works on leveraging deep reinforcement learning for motion planning in autonomous driving. While traditional motion planning approaches rely on handcrafted heuristics and brittle, hard-coded policies, we’re interested in how reinforcement learning can be used to automate this process by learning from data. We have investigated how online reinforcement learning algorithms can be deployed on autonomous vehicles in simulation, and have used state-of-the-art on-policy and off-policy approaches to solve various simulated driving benchmarks. We are exploring how we can continue to scale these approaches to tackle more complicated scenarios and how we can accelerate training by exploiting parallelism. Additionally, the lab is focused on offline reinforcement learning, which can learn policies from large datasets of previously collected trajectories without requiring on-policy samples. This work is crucial for bringing reinforcement learning approaches to safety-critical real-world domains like autonomous driving. We develop both model-based and model-free approaches and explore their effectiveness in settings with various degrees of data quality.

Making AI Usable & Accessible

These research thrusts address the pain points of applying ML in practice. This is AI research informed by constant exposure to real-world, domain-specific constraints, including resource limits, privacy considerations, and user trust and understanding.

Highlighted Work

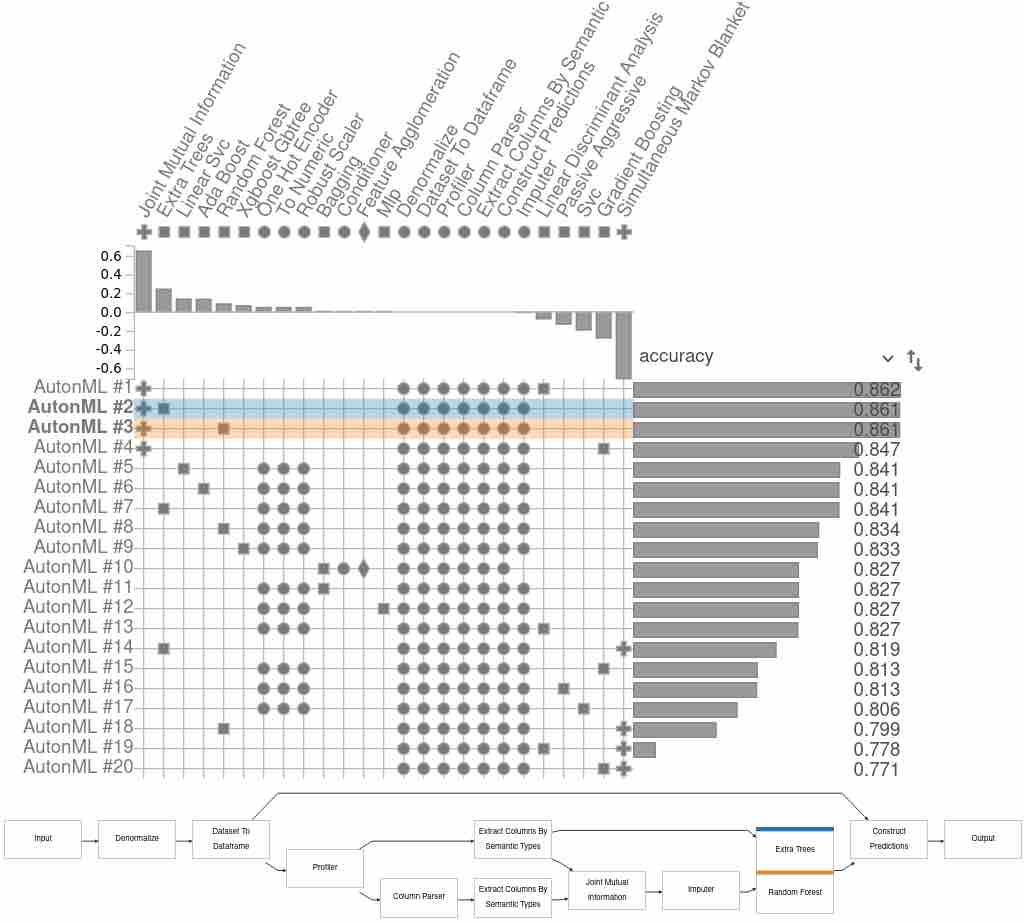

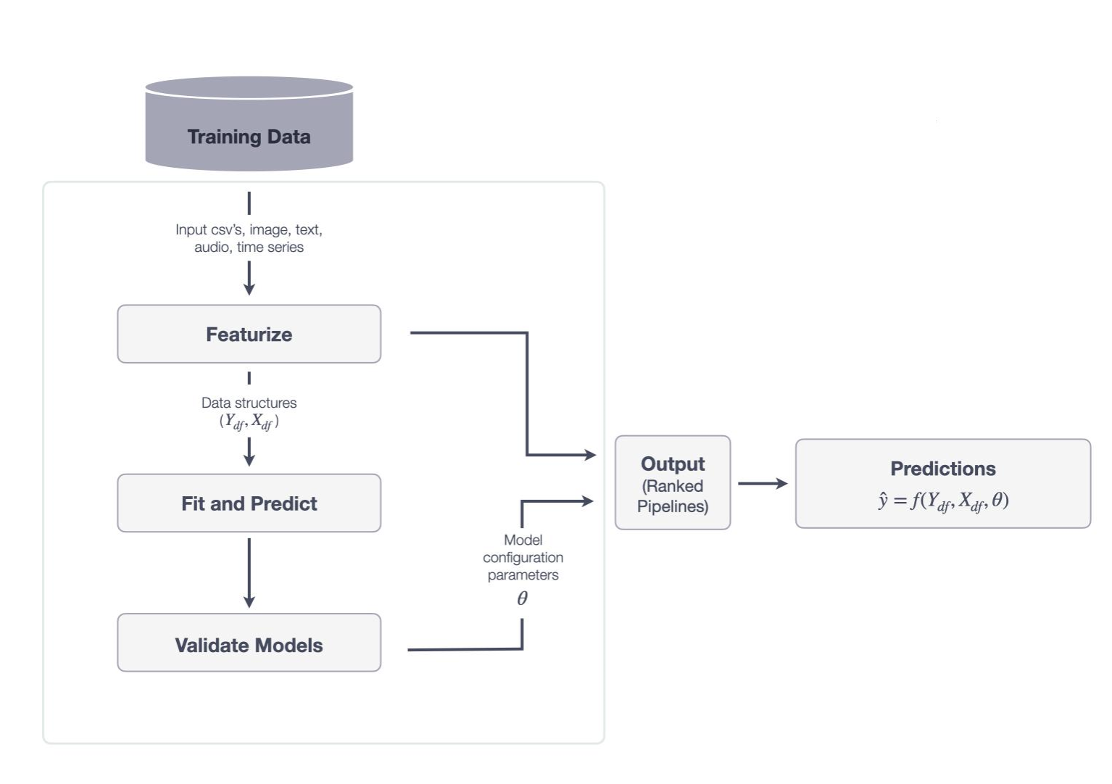

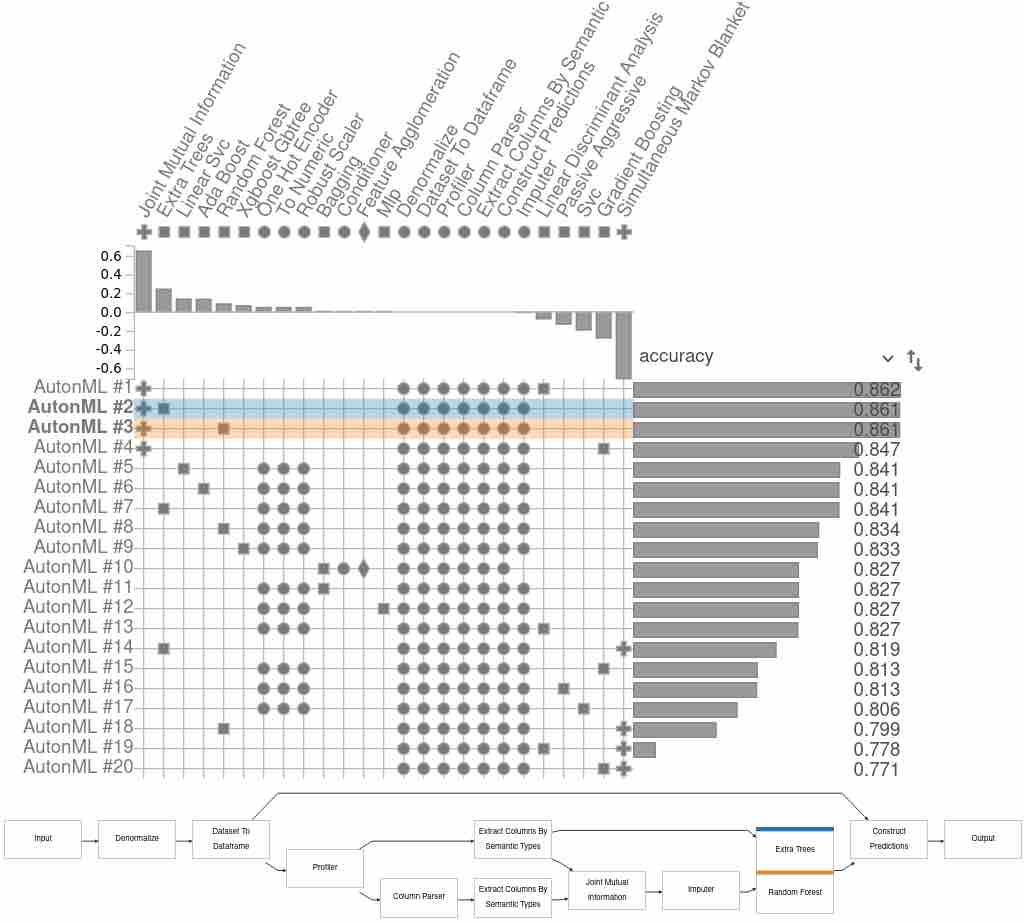

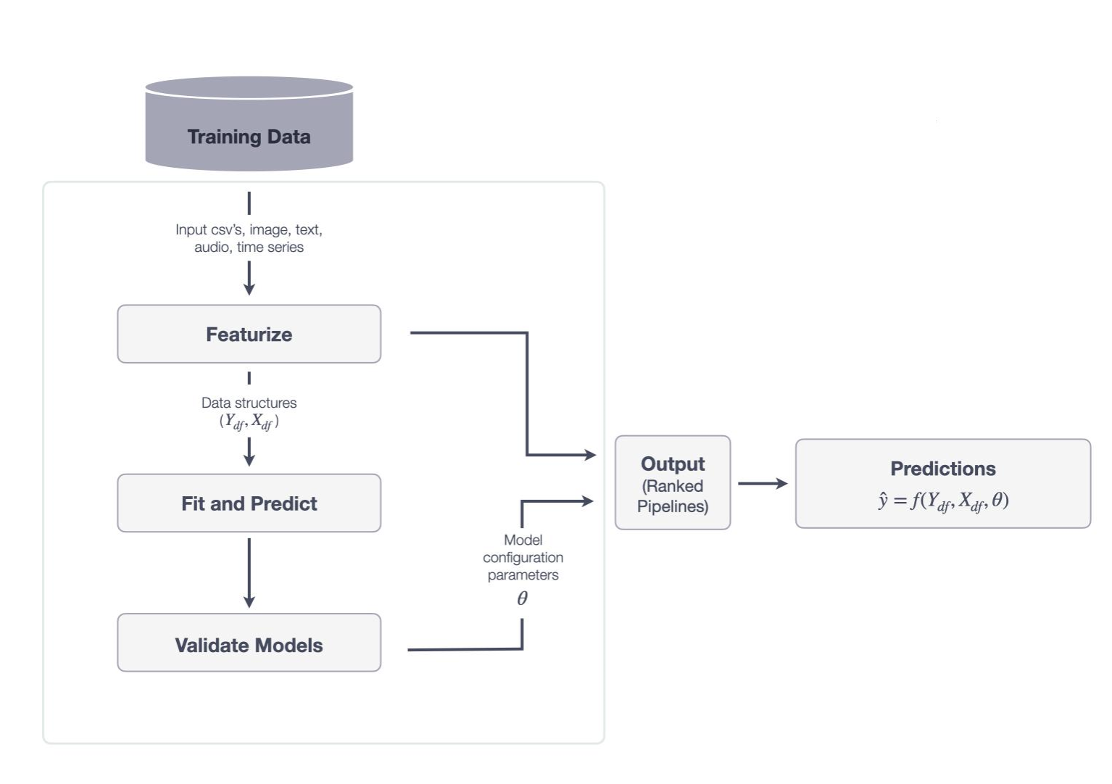

Automated Machine Learning

AutonML augments the capacity of data scientists by automating searches for plausible modeling process designs. It can help address shortages of qualified personnel and boost the productivity of current staff by automatically learning what is learnable from data. Increasingly, state-of-the-art AI systems require infrastructure that limits their application to organizations with vast resources. Auton Lab works to make these data-hungry learning paradigms more efficient so that our models may be applied in contexts where abundant data is not easily obtained, allowing those who would otherwise be left behind to unlock the promise of AI in their critical contexts. Auton Lab also works to automate much of the process of cleaning data, discovering learnable parameters, training models, and tuning hyperparameters. Such work reduces the need for experienced data scientists to be involved in the model development process, and gives more agency to people who want to use AI in effective ways.

Distributed AI

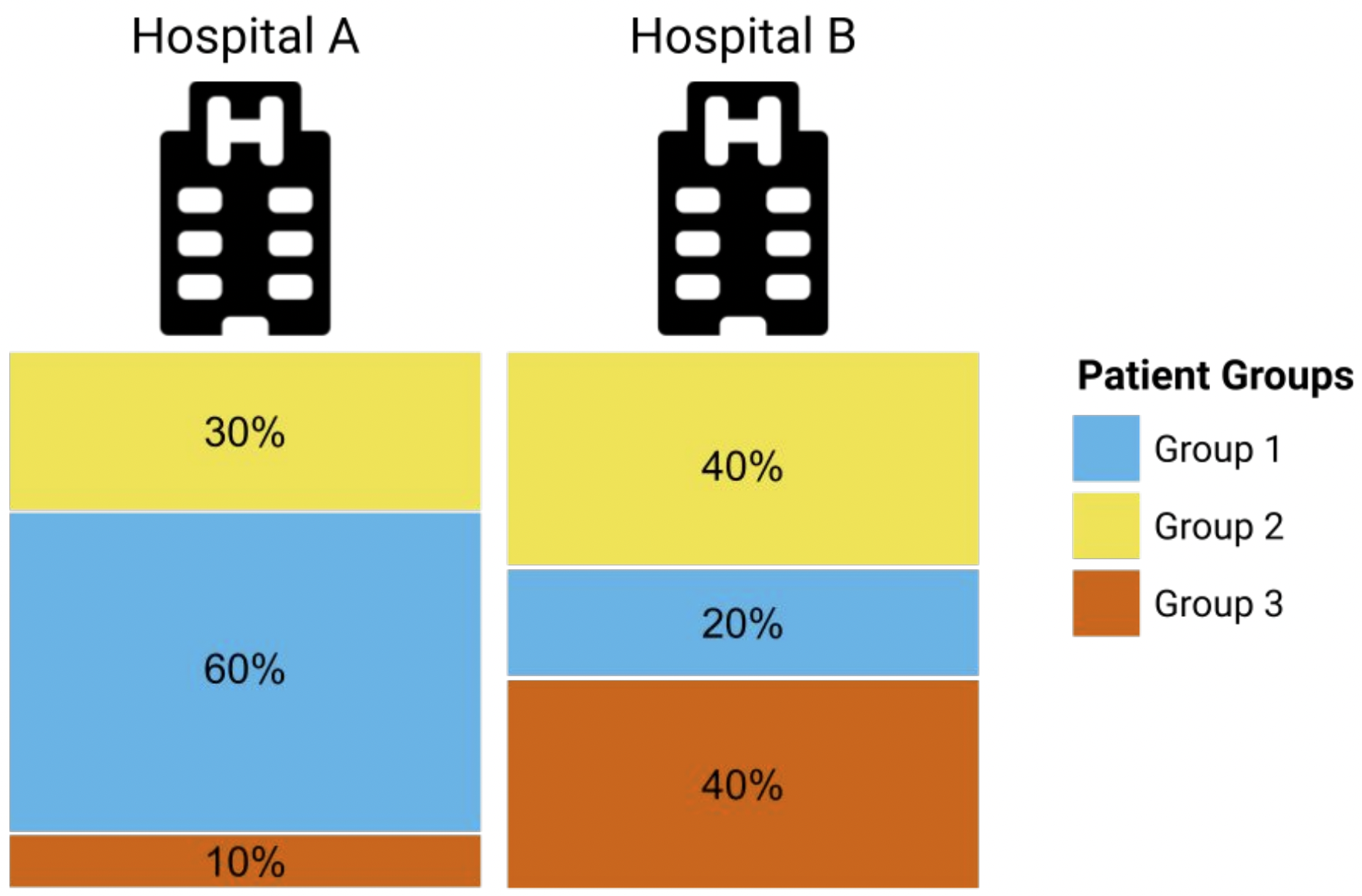

Federated Learning supports machine learning in a distributed manner, by learning on local data and updating global model parameters. Federated Learning is particularly applicable in cases where local datasets have ownership and privacy considerations, or where the sheer amount of data makes centralized model learning impractical. Distribution of compute resources to where the data resides also allows local models to learn idiosyncratic structure from each locality, complementing the structure that emerges in the global model.
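
A minimal sketch of this loop of local learning and global parameter updates, in the style of federated averaging, is shown below. Everything in it is an illustrative assumption rather than the lab's method: the one-dimensional linear model, the two toy clients, the learning rate, and the helper names `local_update`, `federated_average`, and `train`.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's data for y ~ w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error on this client's local data only.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Average client parameters, weighting each client by its sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def train(clients, rounds=200):
    """Alternate local training and global averaging; raw data never leaves a client."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights

# Two hypothetical clients holding disjoint samples of the same relation y = 2x + 1.
clients = [
    [(-1.0, -1.0), (-0.5, 0.0), (0.0, 1.0)],
    [(0.5, 2.0), (1.0, 3.0)],
]
w, b = train(clients)  # w approaches 2 and b approaches 1
```

Only the parameter pairs `(w, b)` are exchanged between the clients and the aggregator, which is what makes the approach attractive when the local data cannot be pooled.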

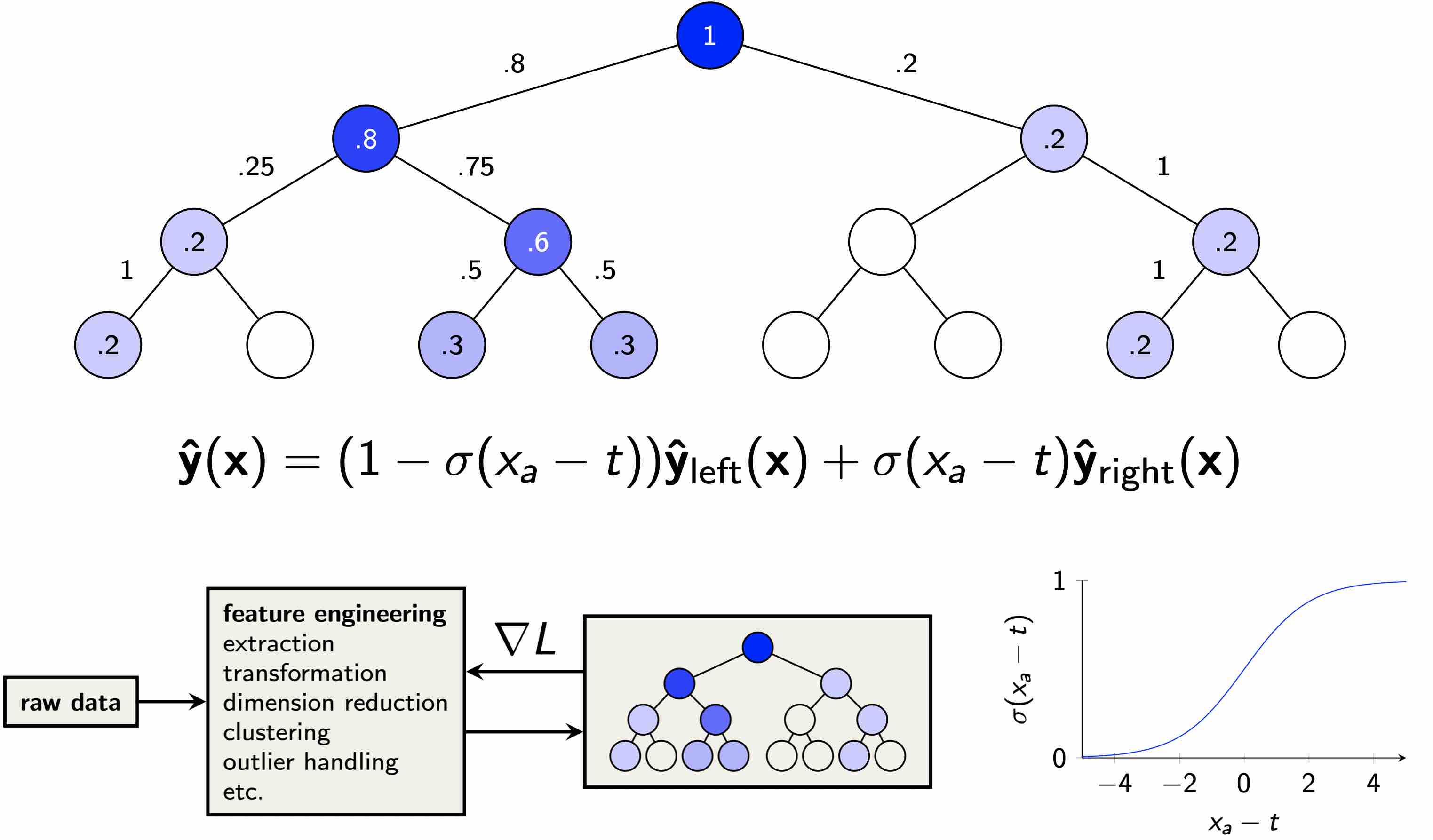

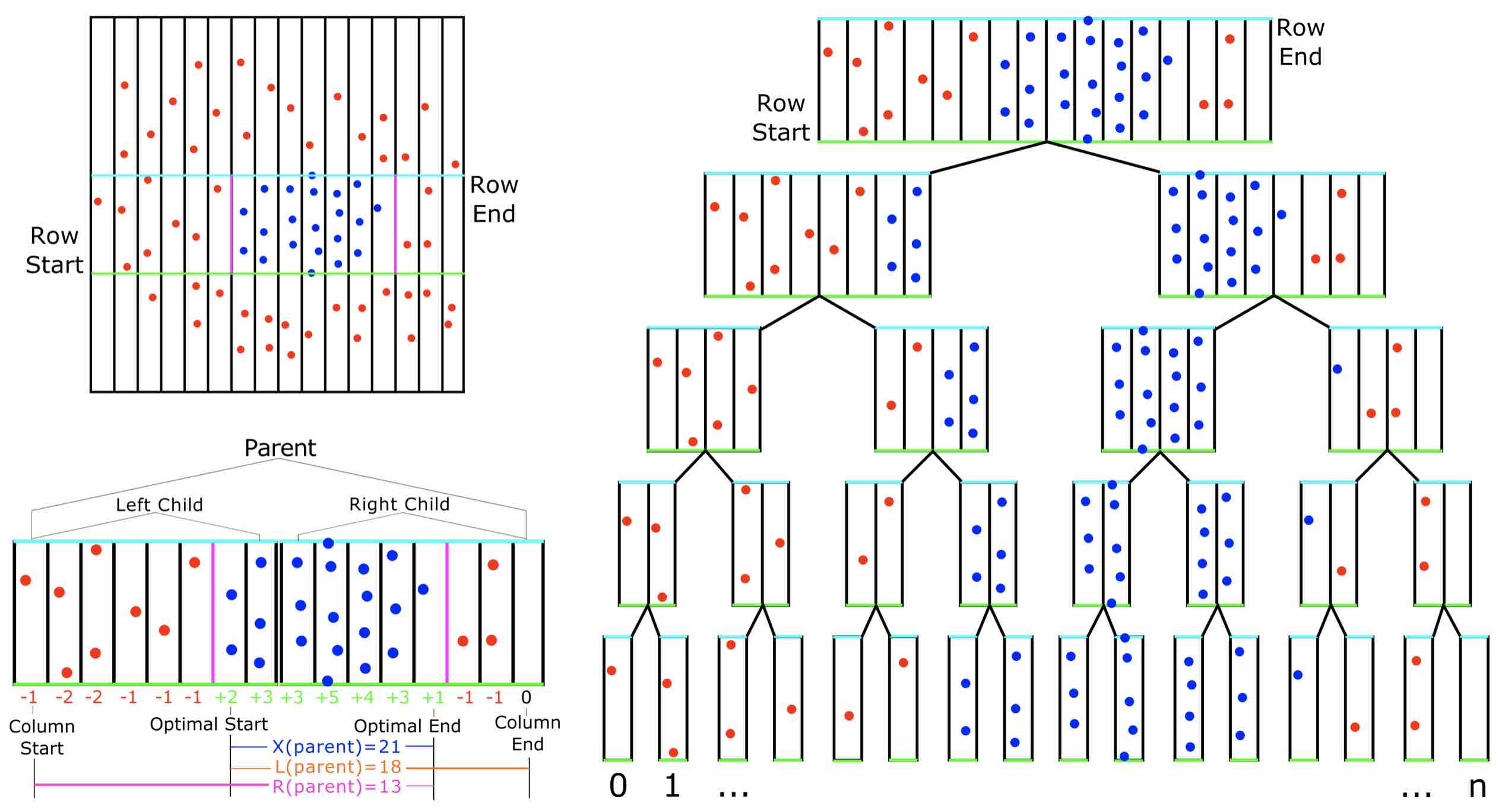

Efficient Data Structures and Learning Algorithms

Intelligent data structures can support fast queries for information that may otherwise take a long time to compute, such as temporal scans and robustness guarantees. We also reduce the resource cost of deep learning algorithms through efficient sampling and by replacing internal network structure with more lightweight alternatives, such as fuzzy decision trees. Other work includes adaptive-resolution temporal scans to answer user queries and disjunctive anomaly detection.

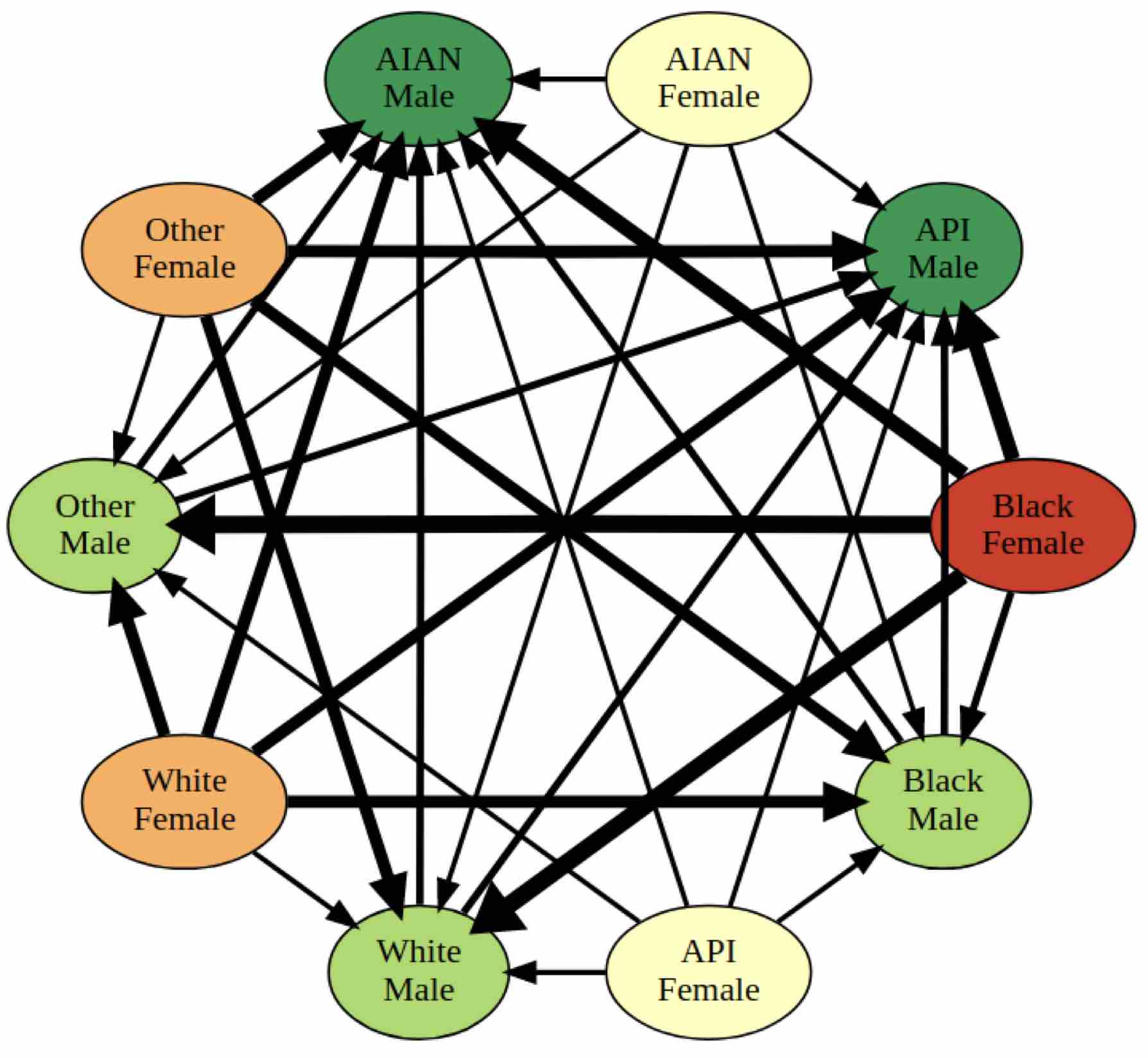

Explainable & Trustworthy AI

If understanding, performance, and trust are integral to the adoption of AI in new, mission-critical fields, a model's inability to rationalize its behavior is rate-limiting. If users cannot supervise AI systems, there is a non-trivial chance that AI will inflict otherwise easily preventable harm on humans. Auton Lab develops a variety of tools intended to give the developers of AI systems a better understanding of what their models actually learn. In developing AI systems for subject matter experts (SMEs), it is important to demonstrate that AI learns a policy that resonates with human decision making in easy-to-adjudicate cases. Proving that AI has learned 'common sense' patterns in data requires that our systems be both explainable and interrogable. Common sense properties of AI systems may include algorithmic fairness in applications that deal with human data. Auton Lab develops methods for determining whether a trained model exhibits unfair bias, which informs decision making not only at model deployment, but also in a streaming manner as deployed models make new predictions.
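
One simple and widely used check for group bias is the demographic parity gap: the difference in positive-prediction rates between groups. The sketch below illustrates only that single metric and is not the lab's method; the function names and the toy predictions are hypothetical.

```python
def positive_rate(predictions, groups, group):
    """Fraction of positive (1) predictions among members of one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def demographic_parity_gap(predictions, groups):
    """Return (gap, per-group rates); a gap of 0 means equal positive rates."""
    rates = {g: positive_rate(predictions, groups, g) for g in set(groups)}
    return max(rates.values()) - min(rates.values()), rates

# Toy binary predictions for eight individuals from two hypothetical groups.
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_gap(predictions, groups)
# rates is {"a": 0.75, "b": 0.25}, so the gap is 0.5
```

Because the metric needs only predictions and group membership, it can be recomputed over a sliding window of recent predictions once a model is deployed.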